Deep Fake Videos: Technology, Applications and Limits

Deep Fake technology is one more tool in a change that we have been experiencing for years. At Vicomtech, when a development proposal for Deep Fake videos or voices arises, we apply our code of ethics and carefully analyze the origin of the request, the sector the application is aimed at and the consequences of its implementation. Want to know more? This post covers the basics of Deep Fake, its applications and its limits.

01.02.2021

To make a Deep Fake video, two videos are needed: one of the person to be impersonated and one of the person doing the impersonating, whose face will be replaced.

The automatic process of generating a Deep Fake relies on a Generative Adversarial Network (GAN), a type of neural network trained here on millions of face images. The network is then trained on the faces from the two videos, with its parameters adjusted at each iteration. It has two parts: the generative part (the generator), which creates the Deep Fake, and the adversarial part (the discriminator), which decides whether a face is fake or real. Once the generator manages to deceive the discriminator, the face is replaced in the video frame by frame.
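
The interplay between the generative part (generator) and the adversarial part (discriminator) can be illustrated with a deliberately tiny sketch. It is not the pipeline used for real Deep Fakes, which works on face images with deep networks; here the "data" are single numbers, the generator is a line `a*z + b`, and the discriminator is a one-variable logistic classifier. The class name `ToyGAN`, the learning rate, and the target distribution (mean 4.0, standard deviation 0.5) are assumptions chosen only for illustration.

```python
import math
import random


def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class ToyGAN:
    """Hypothetical 1-D toy: G(z) = a*z + b, D(x) = sigmoid(w*x + c)."""

    def __init__(self, lr=0.05):
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters
        self.lr = lr               # assumed learning rate

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        # Estimated probability that x is a real sample.
        return sigmoid(self.w * x + self.c)

    def d_loss(self, reals, fakes):
        # Binary cross-entropy: reals labelled 1, fakes labelled 0.
        eps = 1e-12
        real = sum(-math.log(self.discriminate(x) + eps) for x in reals)
        fake = sum(-math.log(1.0 - self.discriminate(x) + eps) for x in fakes)
        return real / len(reals) + fake / len(fakes)

    def discriminator_step(self, reals, fakes):
        # One gradient-descent step on d_loss with respect to w and c.
        gw = gc = 0.0
        for x in reals:
            p = self.discriminate(x)
            gw += -(1.0 - p) * x / len(reals)
            gc += -(1.0 - p) / len(reals)
        for x in fakes:
            p = self.discriminate(x)
            gw += p * x / len(fakes)
            gc += p / len(fakes)
        self.w -= self.lr * gw
        self.c -= self.lr * gc

    def generator_step(self, noise):
        # One gradient-descent step on -log D(G(z)): the generator is
        # rewarded when the discriminator takes its output for real.
        ga = gb = 0.0
        for z in noise:
            p = self.discriminate(self.generate(z))
            ga += -(1.0 - p) * self.w * z / len(noise)
            gb += -(1.0 - p) * self.w / len(noise)
        self.a -= self.lr * ga
        self.b -= self.lr * gb


def train(steps=200, batch=32, seed=0):
    # Alternate discriminator and generator updates, as in GAN training.
    rng = random.Random(seed)
    gan = ToyGAN()
    for _ in range(steps):
        reals = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # assumed "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fakes = [gan.generate(z) for z in noise]
        gan.discriminator_step(reals, fakes)
        gan.generator_step(noise)
    return gan
```

Calling `train()` returns the trained toy model; `gan.discriminate(x)` then gives the discriminator's estimate that `x` is real. Training of this kind is known to oscillate rather than settle cleanly, so the sketch is meant to show the alternating updates, not a guaranteed result.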

Since the result of a Deep Fake usually has small flaws, photo retouching techniques are then applied to each frame.

Ethical implications and responsibility. Risk of misinformation

With Deep Fake technologies, whether they generate video or audio, special care must be taken because of the realism they bring to applications. The technology advances every day and its results become more realistic; as a result, the danger of misinformation, confusion, and impersonation grows.

At Vicomtech we are committed to taking advantage of the opportunities offered by technological advances, but special attention must always be paid to how they are used, in this case to the Deep Fake videos that are generated. Generating a video for an advertising campaign is not the same as generating a video of a public figure delivering messages that can have a very deep impact on the general population.

However, we consider it one more tool in a change that we have been experiencing for years and to which we must adapt. At Vicomtech, when a development proposal for Deep Fake videos or voices arises, we apply our code of ethics and carefully analyze the origin of the request, the sector the application is aimed at and the consequences of its implementation.

Although the development of the technologies themselves has no limits, their specific applications and their scope of transfer do, and this is an issue that must be analyzed very carefully. A clear way forward is a commitment to education in critical thinking and to regulation, combined with artificial intelligence tools that identify whether a video is real or not.

At Vicomtech we also promote the research and development of tools of this type, which help analyze videos and validate both their origin and their veracity before they are published, when necessary.

Market Opportunities for Deep Fake Videos and Future Applications

There are two market segments for this type of solution. On the one hand, there is demand for digital content to support advertising and marketing campaigns in general. On the other, there are corporate communication strategies. In both cases, what is typically requested is the development of new applications with high technological content, with or without animation, that make an impact on the target audience.

For many years, technology has been helping to strike a good balance between automating communications and maintaining a high level of personalization, and there is still a long way to go. For example, many of the "software bots" that currently speak or write to us will probably have their own "face" in the near future.

A third application now emerging is related to privacy: using the technology to preserve people's privacy in certain forums.

It is clear that realism will increase and that the technology will become more and more accessible in the market, which will multiply the possible applications.

At Vicomtech, since we generated Franco's synthetic voice for the X-Rey podcast, we have been receiving requests of various kinds to apply these technologies; for example, from people with diseases that impair the voice who want to ensure that in the future they will be able to communicate with their loved ones in their current voice. This gives an idea of the scope and the wide range of applications and sectors these technologies can serve.

Some tips to detect a Deep Fake

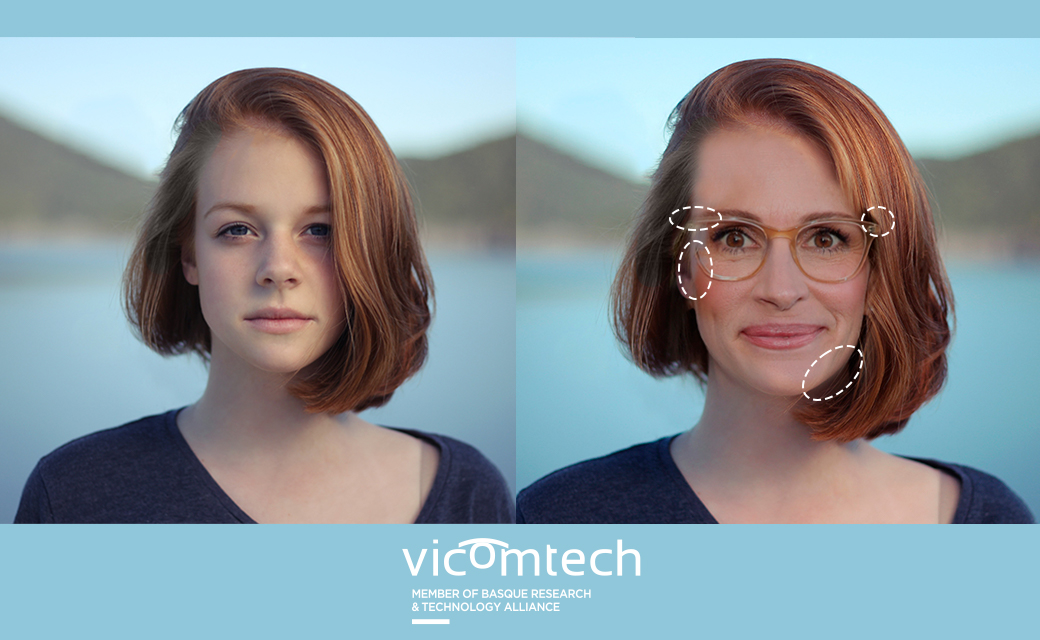

Here are some practical tips for detecting a Deep Fake.

- Facial expressions: Are the facial expressions natural? Do the lips move in sync with the voice? Do the cheeks deform naturally? Do the forehead wrinkles move in line with the facial expressions?

- Shadows: around the eyes and eyebrows, the generative system produces faces from learned patterns but does not understand what a shadow is, so these anomalies are visible on close inspection.

- Glasses: reflections on glasses often produce a brightness in the image that makes the result look unnatural.

- Blinking: a person blinks about 20 times per minute on average; the Deep Fake generation algorithm does not take this into account.
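
The blinking tip lends itself to a simple automated check. The sketch below assumes that some face-landmark tool has already produced one "eye openness" value per frame (near 0 when the eye is closed, near 1 when open); that input, the function names, the closed-eye threshold of 0.2 and the 25% tolerance are all hypothetical choices for illustration. Only the reference rate of 20 blinks per minute comes from the tip above.

```python
def count_blinks(eye_openness, closed_threshold=0.2):
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_threshold  # assumed threshold
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts on this frame
        was_closed = is_closed
    return blinks


def blinks_per_minute(eye_openness, fps, closed_threshold=0.2):
    """Convert the blink count into a rate, given the video frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if not eye_openness:
        return 0.0
    duration_minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_threshold) / duration_minutes


def looks_suspicious(eye_openness, fps, expected_rate=20.0, min_fraction=0.25):
    """Flag clips whose blink rate falls far below the expected average."""
    rate = blinks_per_minute(eye_openness, fps)
    return rate < expected_rate * min_fraction
```

A one-minute clip with no closed-eye frames would be flagged, while a clip with roughly 20 blinks per minute would not. A low blink rate is only a hint, best combined with the other tips rather than used as proof on its own.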